Ever since Java 8 introduced Streams, a lot of everyday loop code has moved into stream pipelines. Streams are readable and provide a rich set of operations for transforming data. And on large datasets, the performance difference between a well-written stream pipeline and a classic loop is often negligible.

However, streams can also hide real costs: a pipeline can look harmless and still carry serious performance overhead.

In this blog post we look at one of those patterns: using wrapper types (e.g., Integer, Long, Double) in numeric stream pipelines, where repeated boxing and unboxing adds unnecessary overhead and quietly pushes extra work onto the heap and the garbage collector (GC).

A numeric stream pipeline

Let us consider an example where you are processing a large dataset of order subtotals. In many systems this data comes from a database or an ORM layer, so it is commonly represented as a collection of wrapper values, such as a List<Long>:

```java
List<Long> subtotals = repo.loadSubtotals();
```

Assume we need to apply a simple rule:

- keep only orders with subtotal ≥ 50_000

- qualifying orders get a 5% discount

- compute the total payable amount for qualifying orders
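As a point of reference, here is a minimal sketch of the rule written as a classic loop, which also gives a known-good result to compare the stream versions against. The class name `DiscountRuleExample`, the method `totalPayableLoop`, and the sample subtotals are hypothetical. One detail worth noting: `(t * 95) / 100` is integer division, so any fractional part of the discounted amount is truncated (50_001 becomes 47_500).

```java
import java.util.List;

// Hypothetical reference implementation of the discount rule, for illustration only.
final class DiscountRuleExample {

    static final long THRESHOLD = 50_000L;

    // Classic loop: keep subtotals >= THRESHOLD, apply a 5% discount, sum the results.
    static long totalPayableLoop(List<Long> subtotals) {
        long total = 0L;
        for (Long boxed : subtotals) {
            long t = boxed;                  // unbox once per element
            if (t >= THRESHOLD) {
                total += (t * 95) / 100;     // integer division truncates any fractional part
            }
        }
        return total;
    }

    public static void main(String[] args) {
        // Hypothetical sample: 40_000 is filtered out; 50_001 * 95 / 100 truncates to 47_500.
        List<Long> sample = List.of(40_000L, 50_000L, 60_000L, 50_001L);
        System.out.println(totalPayableLoop(sample)); // prints 152000
    }
}
```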

Scenario 1: The common wrapper pipeline

We define a simple stream pipeline that does this:

```java
long totalPayable = subtotals.stream()
        .filter(t -> t >= 50_000L)      // unboxing for comparison
        .map(t -> (t * 95) / 100)       // unbox to compute, then box result back to Long
        .mapToLong(Long::longValue)     // unboxing again for sum
        .sum();
```

Note that we are still working with a Stream<Long>, not a primitive LongStream. To evaluate the filter comparison t >= 50_000L, each Long must first be unboxed into a long.

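The map stage, .map(t -> (t * 95) / 100), also forces an unbox to perform the arithmetic, and then it must box the result back into a Long so the pipeline stays a Stream<Long>. Boxing goes through Long.valueOf, which only guarantees caching for values from -128 to 127, so in general each discounted subtotal becomes a new Long object on the heap. That is additional wrapper overhead and extra allocation pressure.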
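Roughly speaking, the compiler turns the implicit conversions in these lambdas into calls to longValue() and Long.valueOf(long). The sketch below writes those calls out by hand next to the original form so the per-element cost is visible; it illustrates the conversions involved rather than the exact bytecode, and the class and method names are hypothetical.

```java
import java.util.List;
import java.util.stream.Collectors;

final class BoxingSpelledOut {

    // The stages as written in Scenario 1: auto-unboxing and auto-boxing are implicit.
    static List<Long> implicitBoxing(List<Long> subtotals) {
        return subtotals.stream()
                .filter(t -> t >= 50_000L)
                .map(t -> (t * 95) / 100)
                .collect(Collectors.toList());
    }

    // The same stages with the conversions written out explicitly.
    static List<Long> explicitBoxing(List<Long> subtotals) {
        return subtotals.stream()
                .filter(t -> t.longValue() >= 50_000L)                 // unbox to compare
                .map(t -> Long.valueOf((t.longValue() * 95) / 100))    // unbox, compute, box again
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Long> sample = List.of(40_000L, 50_000L, 60_000L);
        System.out.println(implicitBoxing(sample)); // prints [47500, 57000]
        System.out.println(explicitBoxing(sample)); // prints [47500, 57000]
    }
}
```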

Finally, Stream<Long> has no sum() method, so to apply the terminal sum we switch to a LongStream with mapToLong(Long::longValue), which unboxes every element again. We could use reduce here instead, but the issue stays the same: we keep a wrapper pipeline even though all we are doing is a numeric calculation that works on primitives.
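For comparison, a minimal sketch of the reduce variant, assuming reduce(0L, Long::sum) as the reduction (the class and method names are hypothetical). It stays on Stream<Long> all the way to the end, so in principle every accumulation step unboxes both operands and boxes the new running total.

```java
import java.util.List;

final class ReduceVariant {

    // Same rule, summed with reduce on the boxed stream instead of mapToLong(...).sum().
    // Long::sum takes two longs, so each step unboxes its operands and boxes the result.
    static long totalPayableReduce(List<Long> subtotals) {
        return subtotals.stream()
                .filter(t -> t >= 50_000L)
                .map(t -> (t * 95) / 100)
                .reduce(0L, Long::sum);     // identity 0L is boxed to a Long
    }

    public static void main(String[] args) {
        System.out.println(totalPayableReduce(List.of(40_000L, 50_000L, 60_000L))); // prints 104500
    }
}
```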

Scenario 2: A primitive pipeline

The alternative is to convert to a primitive stream as early as possible and keep the rest of the pipeline primitive. Since our input is a List<Long>, we can do that by switching to a LongStream immediately with mapToLong:

```java
long totalPayable = subtotals.stream()
        .mapToLong(Long::longValue)     // unbox once: Stream<Long> -> LongStream
        .filter(t -> t >= 50_000L)      // primitive comparison
        .map(t -> (t * 95) / 100)       // primitive arithmetic
        .sum();                         // terminal sum on primitives
```

The logic is identical, but the pipeline is very different. After the mapToLong call we are no longer dealing with wrapper objects: the filter and map stages operate on primitive long values, so there is no need to box intermediate results back into Long objects just to keep the stream going.

In practice, this means the unboxing cost is paid once at the boundary, and the rest of the pipeline stays in primitive form. On large datasets, this often reduces wrapper overhead, lowers heap pressure, and can make the pipeline easier for the JIT compiler to optimize.
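The same convert-once pattern applies to the other wrapper types through mapToInt and mapToDouble, which the conclusion also mentions. A minimal sketch, assuming hypothetical inputs (a List<Integer> of item quantities and a List<Double> of prices) and hypothetical method names:

```java
import java.util.List;

final class OtherPrimitiveStreams {

    // List<Integer> -> IntStream: unbox once, then filter and sum on primitive ints.
    static int totalLargeQuantities(List<Integer> quantities) {
        return quantities.stream()
                .mapToInt(Integer::intValue)
                .filter(q -> q >= 10)
                .sum();
    }

    // List<Double> -> DoubleStream: unbox once, then average on primitive doubles.
    static double averagePrice(List<Double> prices) {
        return prices.stream()
                .mapToDouble(Double::doubleValue)
                .average()          // OptionalDouble, empty for an empty list
                .orElse(0.0);
    }

    public static void main(String[] args) {
        System.out.println(totalLargeQuantities(List.of(5, 10, 20))); // prints 30
        System.out.println(averagePrice(List.of(1.0, 2.0, 3.0)));     // prints 2.0
    }
}
```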

Performance Test

In this section, we use JMH (the Java Microbenchmark Harness) to run a proper performance test. If you want more background on the setup and how JMH works, you can read more here:

Both benchmarks run the same logic on the same List<Long>, with 1M and 5M elements (set via @Param). Here is the code for the performance test:

```java
import org.openjdk.jmh.annotations.*;

import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.TimeUnit;

@BenchmarkMode(Mode.AverageTime)
@OutputTimeUnit(TimeUnit.MILLISECONDS)
@Warmup(iterations = 5, time = 1)
@Measurement(iterations = 7, time = 1)
@Fork(2)
@State(Scope.Thread)
public class WrapperVsPrimitiveStreamBench {

    @Param({"1000000", "5000000"})
    int size;

    List<Long> values;

    @Setup(Level.Trial)
    public void setup() {
        values = new ArrayList<>(size);
        for (int i = 0; i < size; i++) {
            values.add(10_000L + (i * 37L));
        }
    }

    @Benchmark
    public long wrapperPipeline() {
        return values.stream()
                .filter(v -> v >= 50_000L)
                .map(v -> (v * 95) / 100)
                .mapToLong(Long::longValue)
                .sum();
    }

    @Benchmark
    public long primitivePipeline() {
        return values.stream()
                .mapToLong(Long::longValue)
                .filter(v -> v >= 50_000L)
                .map(v -> (v * 95) / 100)
                .sum();
    }
}
```

Run `mvn clean install` to build the project, then run the benchmark with:

```shell
java -jar target/*.jar WrapperVsPrimitiveStreamBench -prof gc
```
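Before reading too much into timing numbers, it helps to confirm that both benchmark methods actually compute the same value. Below is a minimal plain-Java sketch (no JMH involved) that rebuilds the input the same way setup() does and compares the two pipelines. The class PipelineEquivalenceCheck and its static methods are hypothetical copies of the benchmark logic, not part of the original project.

```java
import java.util.ArrayList;
import java.util.List;

final class PipelineEquivalenceCheck {

    // Same data generation as the benchmark's setup() method.
    static List<Long> buildValues(int size) {
        List<Long> values = new ArrayList<>(size);
        for (int i = 0; i < size; i++) {
            values.add(10_000L + (i * 37L));
        }
        return values;
    }

    // Copy of wrapperPipeline(): stays on Stream<Long> until mapToLong.
    static long wrapperPipeline(List<Long> values) {
        return values.stream()
                .filter(v -> v >= 50_000L)
                .map(v -> (v * 95) / 100)
                .mapToLong(Long::longValue)
                .sum();
    }

    // Copy of primitivePipeline(): switches to LongStream first.
    static long primitivePipeline(List<Long> values) {
        return values.stream()
                .mapToLong(Long::longValue)
                .filter(v -> v >= 50_000L)
                .map(v -> (v * 95) / 100)
                .sum();
    }

    public static void main(String[] args) {
        List<Long> values = buildValues(1_000_000);
        long wrapped = wrapperPipeline(values);
        long primitive = primitivePipeline(values);
        if (wrapped != primitive) {
            throw new IllegalStateException("Pipelines disagree: " + wrapped + " vs " + primitive);
        }
        System.out.println("Both pipelines return " + wrapped);
    }
}
```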

Results

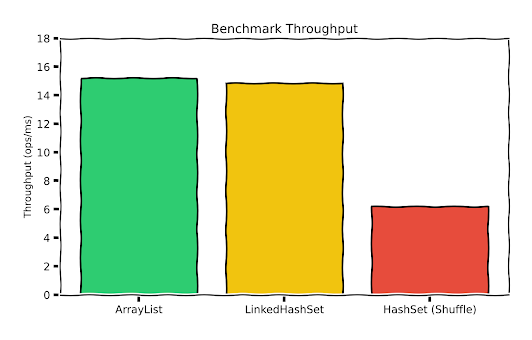

JMH results (with -prof gc)

| size | pipeline | time (ms/op) | alloc (B/op) | alloc (MiB/op) | GC count |
|---|---|---|---|---|---|
| 1,000,000 | primitivePipeline | 6.214 | 458.630 | 0.000 | ≈ 0 |
| 1,000,000 | wrapperPipeline | 17.086 | 23,974,565.502 | 22.864 | 63 |
| 5,000,000 | primitivePipeline | 31.513 | 628.366 | 0.001 | ≈ 0 |
| 5,000,000 | wrapperPipeline | 90.486 | 119,975,046.173 | 114.417 | 44 |

The results match what we expected:

- Runtime: the wrapper pipeline is ~2.75x slower at 1M and ~2.87x slower at 5M.

- Allocation: the wrapper pipeline allocates ~22.9 MiB/op at 1M and ~114.4 MiB/op at 5M, while the primitive pipeline stays near zero (a few hundred bytes per operation).

- GC: the wrapper pipeline triggers frequent GC cycles during measurement (63 at 1M, 44 at 5M); the primitive pipeline triggers essentially none.
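The ratios and MiB figures in this list can be reproduced directly from the results table. This small sketch (hypothetical class and method names) divides the wrapper time by the primitive time and converts B/op to MiB/op using 1 MiB = 1,048,576 bytes; Locale.ROOT keeps the decimal separator a dot regardless of system settings.

```java
import java.util.Locale;

final class ResultArithmetic {

    static final double BYTES_PER_MIB = 1024.0 * 1024.0;

    // Slowdown factor = wrapper time / primitive time (both in ms/op).
    static double slowdown(double wrapperMs, double primitiveMs) {
        return wrapperMs / primitiveMs;
    }

    // Convert JMH's normalized allocation (B/op) to MiB/op.
    static double toMiB(double bytesPerOp) {
        return bytesPerOp / BYTES_PER_MIB;
    }

    public static void main(String[] args) {
        // Values copied from the results table.
        System.out.printf(Locale.ROOT, "1M slowdown: %.2fx%n", slowdown(17.086, 6.214));      // 2.75x
        System.out.printf(Locale.ROOT, "5M slowdown: %.2fx%n", slowdown(90.486, 31.513));     // 2.87x
        System.out.printf(Locale.ROOT, "1M alloc: %.1f MiB/op%n", toMiB(23_974_565.502));     // 22.9 MiB/op
        System.out.printf(Locale.ROOT, "5M alloc: %.1f MiB/op%n", toMiB(119_975_046.173));    // 114.4 MiB/op
    }
}
```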

Conclusion

As we have seen in this post, if you are doing numeric work in streams, avoid keeping the pipeline on wrapper types. In our benchmark, the wrapper pipeline was roughly 2.8× slower and allocated about 23 to 114 MiB per operation, which caused frequent GC. Converting once to a primitive stream (mapToLong, mapToInt, mapToDouble) kept allocations near zero and removed the GC noise, which can result in much better throughput and lower latency on large datasets.